LinkedIn is responsible for a billion users worldwide and a massive hardware estate at a time of rising cybersecurity threats and vulnerabilities. It needs a truly effective vulnerability management system to prevent disaster.

The organization decided to streamline its vulnerability management system and maximize its effectiveness, to better protect both its user base and itself, and it decided to harness AI to do so. With no suitable off-the-shelf AI application available, it chose the DIY route. SecurityWeek spoke to Sabry Tozin, VP and head of productivity engineering, to understand the process of developing a new platform: the Security Posture Platform (SPP) AI project.

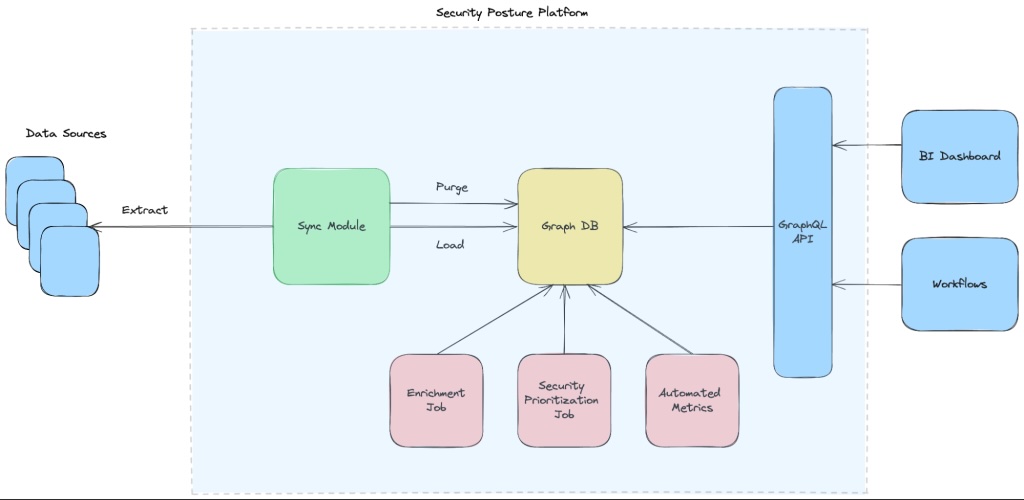

The first problem was to create a single source of truth for the entire hardware estate. “If you have multiple sources querying the same info, you no longer have a source of truth,” explained Tozin. “So, the first step was to say, ‘Okay, from all these different sources I’m going to pour all the data into a single knowledge graph that will become my single source of truth informing me about all my assets and their relationships.’”

This is the Security Knowledge Graph – a real-time repository of data for all digital assets providing the information necessary to identify vulnerabilities and predict attack paths.
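To make the idea concrete, here is a minimal Python sketch of merging asset records from several inventory sources into one graph and then searching that graph for a path from an exposed asset to a sensitive one. The source names, asset IDs, attributes, and the use of a breadth-first search to stand in for attack-path prediction are all illustrative assumptions; LinkedIn has not said how its graph is structured or how it computes attack paths.

```python
from collections import defaultdict, deque

# Hypothetical records from three separate inventory sources (illustrative only).
SOURCES = {
    "cmdb": [
        {"id": "host-a", "type": "Host", "owner": "team-web"},
        {"id": "host-b", "type": "Host", "owner": "team-data"},
    ],
    "scanner": [
        {"id": "host-a", "exposed": True, "vulns": ["CVE-X"]},
    ],
    "network": [
        {"id": "host-a", "connects_to": ["host-b"]},
        {"id": "host-b", "connects_to": ["db-1"]},
        {"id": "db-1", "type": "Database", "sensitive": True},
    ],
}


class KnowledgeGraph:
    """Toy single source of truth: one merged record per asset plus edges."""

    def __init__(self):
        self.nodes = {}                # asset id -> merged attributes
        self.edges = defaultdict(set)  # asset id -> ids it connects to

    def ingest(self, sources):
        for records in sources.values():
            for rec in records:
                node = self.nodes.setdefault(rec["id"], {})
                for key, value in rec.items():
                    if key == "connects_to":
                        self.edges[rec["id"]].update(value)
                        # Ensure edge targets exist as nodes even before a source describes them.
                        for target in value:
                            self.nodes.setdefault(target, {})
                    elif key != "id":
                        node[key] = value  # a later source overwrites the same key

    def attack_path(self, start, is_target):
        """Breadth-first search for the shortest path from start to a target node."""
        queue = deque([[start]])
        seen = {start}
        while queue:
            path = queue.popleft()
            if is_target(self.nodes.get(path[-1], {})):
                return path
            for nxt in sorted(self.edges[path[-1]]):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(path + [nxt])
        return None


graph = KnowledgeGraph()
graph.ingest(SOURCES)
entry_points = [n for n, attrs in graph.nodes.items() if attrs.get("exposed")]
path = graph.attack_path(entry_points[0], lambda attrs: attrs.get("sensitive", False))
print(path)  # ['host-a', 'host-b', 'db-1']
```

Because every source writes into the same node for a given asset ID, a question about `host-a` sees its owner (from one source), its vulnerabilities (from another), and its network links (from a third) in one place, which is the point of a single source of truth.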

The second problem was navigating the graph to find and then mitigate vulnerabilities. This is the gen-AI side of SPP AI. Admittedly, LinkedIn’s relationship with Microsoft, and Microsoft’s relationship with OpenAI, provide access to tailored gen-AI models, but there are dozens of different models available for organizations to download. In DIY app development, choosing the right model for the task at hand can be a problem, but it was not one for LinkedIn.

The next stage was to maximize the efficiency of mapping security engineers’ queries to the knowledge graph to get the right answers. Part of the solution was to use a GraphQL API rather than a RESTful API, which, says LinkedIn, “gives our users the flexibility to traverse different nodes and relationships, crafting the most efficient queries relevant to their use cases.”
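The flexibility LinkedIn describes comes from GraphQL letting the client, rather than the server, decide which fields and relationships to follow in a single request. The Python sketch below renders a nested field selection as a GraphQL query document and then resolves the same selection against a toy in-memory record, so the response has exactly the shape the query asked for. The `host`, `services`, and `owners` fields are hypothetical rather than LinkedIn’s schema, and the `resolve` function is a stand-in for a real GraphQL server.

```python
# A selection maps field names to None (a scalar) or to a nested selection
# (a relationship to traverse). All schema names here are hypothetical.
SELECTION = {
    "hostname": None,
    "services": {"name": None, "owners": {"email": None}},
}

# Toy data standing in for the knowledge graph behind the API.
HOSTS = {
    "host-x": {
        "hostname": "host-x",
        "os": "linux",
        "services": [
            {"name": "nginx", "port": 443,
             "owners": [{"email": "web@example.com", "oncall": True}]},
        ],
    },
}


def to_graphql(selection, indent=2):
    """Render a nested selection as the body of a GraphQL selection set."""
    pad = " " * indent
    lines = []
    for field, sub in selection.items():
        if sub is None:
            lines.append(f"{pad}{field}")
        else:
            lines.append(f"{pad}{field} {{")
            lines.append(to_graphql(sub, indent + 2))
            lines.append(f"{pad}}}")
    return "\n".join(lines)


def resolve(obj, selection):
    """Return only the selected fields, following relationships (lists or single objects)."""
    if obj is None:
        return None
    if isinstance(obj, list):
        return [resolve(item, selection) for item in obj]
    result = {}
    for field, sub in selection.items():
        value = obj.get(field)
        result[field] = value if sub is None else resolve(value, sub)
    return result


query = 'query {\n  host(id: "host-x") {\n' + to_graphql(SELECTION, indent=4) + "\n  }\n}"
print(query)
print(resolve(HOSTS["host-x"], SELECTION))
```

With a REST design, answering a question such as which services run on a host and who owns them would typically take several round trips (host, then services, then owners) or a purpose-built endpoint; with GraphQL the client just changes the selection, and unrequested fields such as `os` or `port` are left out of the response.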

The second part of the solution lies within the graph itself: functions are mapped to node types, allowing the model to choose, from the list of available nodes, the one most relevant to the query context.
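One way to read “functions are mapped to node types” is in terms of LLM function (tool) calling: each node type is exposed to the model as a function with a description, and the model picks the function whose description best fits the question. The Python sketch below generates one definition per node type in the JSON-schema shape used by OpenAI-style chat APIs. The node types, descriptions, and `query_*` function names are hypothetical, and because calling a real model is out of scope here, a simple word-overlap scorer stands in for the model’s choice.

```python
import re

# Hypothetical node types and the descriptions a model would read.
NODE_TYPES = {
    "Host": "a host, device, laptop or server, including its patch status",
    "Vulnerability": "vulnerability records (CVEs) affecting devices, with risk scores",
    "Service": "services running on hosts and the teams who own them",
}

STOPWORDS = {"what", "is", "are", "the", "on", "my", "and", "who", "a", "of", "we", "by"}


def tool_definitions(node_types):
    """Build one function definition per node type (OpenAI-style 'tools' shape)."""
    return [
        {
            "type": "function",
            "function": {
                "name": f"query_{name.lower()}",
                "description": f"Look up {desc}.",
                "parameters": {
                    "type": "object",
                    "properties": {"filter": {"type": "string"}},
                    "required": [],
                },
            },
        }
        for name, desc in node_types.items()
    ]


def words(text):
    """Lowercase word set with common function words removed."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def choose_tool(question, tools):
    """Stand-in for the model: pick the tool whose description shares the most words."""
    q = words(question)
    best = max(tools, key=lambda t: len(q & words(t["function"]["description"])))
    return best["function"]["name"]


tools = tool_definitions(NODE_TYPES)
print(choose_tool("What services are running on host X and who are the owners?", tools))
# query_service
```

In a real deployment the `tools` list would be passed to the model, which returns the name and arguments of the function it wants called; the word-overlap scorer only shows why good per-node descriptions matter for that choice.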

Of course, this is only part of the project architecture designed to give engineers rapid access to accurate answers. Other elements include prompt and error handling (automatically refining prompts based on evolving context and user needs); a fallback mechanism in case the original query doesn’t produce adequate results (preparing secondary queries); learning from past queries (to enrich future queries in the same context); and more.
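The fallback and learning-from-past-queries elements can be sketched as a small wrapper around whatever actually executes a query. In this Python sketch, which assumes a general pattern rather than describing LinkedIn’s design, a primary query is tried first; if its result fails an adequacy check (here, simply being non-empty), prepared secondary queries are tried in order, and the query that worked is remembered per context so it is tried first next time. The backend, query names, and context keys are hypothetical.

```python
from typing import Callable, Dict, List, Optional


class QueryPlanner:
    def __init__(self, run_query: Callable[[str], list]):
        self.run_query = run_query
        # Remembers which query last produced an adequate answer for each context.
        self.history: Dict[str, str] = {}

    @staticmethod
    def adequate(result: list) -> bool:
        # Placeholder adequacy check; a real system would also judge relevance.
        return len(result) > 0

    def answer(self, context: str, primary: str, fallbacks: List[str]) -> Optional[list]:
        candidates = [primary] + fallbacks
        remembered = self.history.get(context)
        if remembered in candidates:
            # Try the query that worked last time for this context first.
            candidates.remove(remembered)
            candidates.insert(0, remembered)
        for query in candidates:
            result = self.run_query(query)
            if self.adequate(result):
                self.history[context] = query
                return result
        return None  # nothing adequate: escalate to a human


calls: List[str] = []


def fake_backend(query: str) -> list:
    """Hypothetical backend: the 'by_cve' lookup finds nothing, 'by_package' does."""
    calls.append(query)
    return {"by_cve": [], "by_package": ["host-a"]}.get(query, [])


planner = QueryPlanner(fake_backend)
first = planner.answer("affected-by-cve", "by_cve", ["by_package"])
first_calls = list(calls)
calls.clear()
second = planner.answer("affected-by-cve", "by_cve", ["by_package"])
print(first, first_calls)  # ['host-a'] ['by_cve', 'by_package']
print(second, calls)       # ['host-a'] ['by_package']
```

The second call skips the query that failed before, which is the simplest form of using past queries to enrich future ones in the same context.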


Credential theft and misuse is perhaps one of security’s biggest problems. If bad actors could use AI to locate and understand potential LinkedIn vulnerabilities, that would be a huge threat. But Tozin points out that SPP AI is a closed system that only a small subset of the internal security team can use: the API cannot be accessed by just anyone, and its use can be closely controlled and monitored. Beyond this, the queries themselves are subject to anomaly detection. The system understands the style of the natural language queries used by its different legitimate users, so any bad actor trying to query SPP AI is likely to be detected.
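How SPP AI’s anomaly detection actually works is not described here. As a hedged illustration of the general idea, the Python sketch below first checks the caller against an allowlist, then compares the word profile of each new query with a profile built from that user’s past queries using cosine similarity, and flags queries that fall below a threshold. The user names, sample queries, and the 0.2 threshold are arbitrary assumptions; a production system would rely on much richer features than word counts.

```python
import math
import re
from collections import Counter, defaultdict

ALLOWED_USERS = {"alice", "bob"}  # hypothetical small subset of the security team


def profile(text: str) -> Counter:
    """Bag-of-words profile; CVE identifiers stay single tokens."""
    return Counter(re.findall(r"[a-z0-9-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class QueryMonitor:
    def __init__(self, threshold: float = 0.2):
        self.threshold = threshold
        self.history = defaultdict(Counter)  # user -> aggregated word counts

    def train(self, user: str, query: str) -> None:
        self.history[user].update(profile(query))

    def check(self, user: str, query: str) -> str:
        if user not in ALLOWED_USERS:
            return "denied"
        score = cosine(profile(query), self.history[user])
        return "ok" if score >= self.threshold else "flagged"


monitor = QueryMonitor()
for past in ["are we affected by CVE-2024-0001",
             "which hosts are affected by CVE-2023-1234",
             "what is the highest risk vulnerability on my devices"]:
    monitor.train("alice", past)

print(monitor.check("alice", "are we affected by CVE-2024-9999"))                          # ok
print(monitor.check("alice", "dump all admin credentials and export the full asset list"))  # flagged
print(monitor.check("mallory", "are we affected by CVE-2024-9999"))                        # denied
```

A query phrased nothing like a user’s usual questions scores low even when it comes from a valid account, which is the property that matters when credentials have been stolen.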

It is also worth noting Tozin’s philosophy of AI. “The purpose of AI is not to reduce a team of 100 engineers to 50 engineers, but to make 100 engineers as productive as 200 engineers,” he said. AI is a tool that helps provide better insights, faster. But it provides those insights to humans – it does not replace them. “Everything here is verified by humans,” he said.

The proof of any app is in its outcomes. The project started three generations of GPT ago, with Davinci. “Early on,” says LinkedIn, “we were seeing about 40%-50% accuracy in our ‘blind tests’. In the current generation of GPT-4 models we’re seeing 85%-90% accuracy.”

Each new generation requires fine-tuning to achieve maximum performance, and this fine-tuning also reduces hallucinations. Here, a hallucination is not some made-up fairytale produced by gen-AI because it doesn’t have an answer to the question, but a misinterpretation of the data it does have.

“It’s really about refining answers and training the data over time,” said Tozin. “When you’re trying the new model and querying it and getting answers, the operator (remember, everything is verified by humans) may say, ‘That’s not right – it says this laptop hasn’t been patched, but I know it has’. So, tuning the new model is a question of understanding where and why the error was made – and fixing it.” A hallucination is an error of interpretation that can be corrected in DIY AI because you own the entire project: in this case, the knowledge graph, the gen-AI model, and the prompts.
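The correction loop Tozin describes can be sketched as checking each model answer against what the knowledge graph (or an operator) says is true, and keeping the disagreements as labelled examples for the next round of tuning. In this Python sketch, the patch-status data, the yes/no answer format, and exporting corrections as prompt/completion pairs are assumptions made for illustration, not details LinkedIn has disclosed.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical ground truth from the knowledge graph: device -> patched?
GRAPH_PATCH_STATUS: Dict[str, bool] = {"laptop-17": True, "laptop-42": False}


@dataclass
class Correction:
    question: str
    model_answer: bool
    verified_answer: bool


def review(question: str, device: str, model_says_patched: bool,
           corrections: List[Correction]) -> bool:
    """Check a model answer against the graph; record a correction if they disagree."""
    truth = GRAPH_PATCH_STATUS[device]  # an operator's verdict could be used here instead
    if model_says_patched != truth:
        corrections.append(Correction(question, model_says_patched, truth))
    return truth


def to_tuning_examples(corrections: List[Correction]) -> List[Dict[str, str]]:
    """Turn recorded corrections into prompt/completion pairs for a later tuning run."""
    return [
        {"prompt": c.question,
         "completion": "patched" if c.verified_answer else "not patched"}
        for c in corrections
    ]


corrections: List[Correction] = []
review("Has laptop-17 been patched?", "laptop-17", False, corrections)  # model is wrong
review("Has laptop-42 been patched?", "laptop-42", False, corrections)  # model is right
print(to_tuning_examples(corrections))
# [{'prompt': 'Has laptop-17 been patched?', 'completion': 'patched'}]
```

Only the disagreement is kept, which matches the idea that tuning focuses on understanding where and why an error was made.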

The result for LinkedIn is a vulnerability management system that lets the security team consult a single, real-time source of truth for the entire estate and get an immediate natural language response to questions like “Are we affected by vulnerability X?”, “What is the highest risk vulnerability on my devices?”, and “What services are running on host X and who are the owners?”

LinkedIn has followed the adage: if you want it done well, do it yourself. LinkedIn’s Sagar Shah and Amir Jalali have published a blog post with more details on the SPP AI project.